AMD Announces Dual-Core Processors for Eight-Way Servers

AMD has announced dual-core processors for eight-way servers, marking a significant shift in server architecture. The development promises to redefine performance and efficiency in high-capacity server environments. The move to dual-core processors in eight-way server configurations presents both intriguing possibilities and potential challenges. How will this impact existing server infrastructure and future designs? Let’s delve into the details.

This new announcement from AMD details their approach to server processing, focusing on delivering high-performance solutions for eight-way configurations. Early reports suggest a blend of enhanced processing power and optimized energy efficiency, although concrete benchmarks are yet to be released.

AMD Dual-Core Processors in Eight-Way Servers

AMD’s recent announcement of dual-core processors designed for eight-way server configurations marks a significant step in the evolution of server technology. This architecture, while seemingly a departure from the current trend of multi-core processors, presents a unique opportunity for specific use cases and cost-effective solutions. This blog post delves into the intricacies of this approach, examining its potential advantages and disadvantages in the context of eight-way servers. Dual-core processors, a historical step in CPU development, initially aimed to improve performance by executing two instruction streams simultaneously, one on each core.


Ultimately, these new processors could represent a significant advancement in server technology, though their real-world impact will depend on how well they’re utilized and optimized.

The original dual-core approach, while groundbreaking at the time, was often less efficient than newer multi-core processors capable of handling numerous threads concurrently. However, the current focus on eight-way server configurations suggests a deliberate shift in priorities, likely targeting cost-effectiveness and specific performance requirements.

Dual-Core Architecture Overview

Dual-core processors, in essence, feature two independent processing units (cores) on a single chip. Each core can execute instructions independently, potentially doubling the processing capacity compared to a single-core processor. This design, while not as computationally powerful as modern multi-core processors, offers a balance between cost and performance in specific applications.

Historical Context of Dual-Core in Servers

The use of dual-core processors in server environments has a history. Early adoption was driven by the need for affordable solutions in specific use cases. The initial implementations focused on balancing performance and cost. This design approach was prevalent in certain niches like entry-level servers or specialized applications where raw processing power demands were not as critical.

Significance of Eight-Way Server Configurations

Eight-way server configurations employ eight processor sockets to handle high workloads; with dual-core processors, that amounts to 16 cores in one system. This architecture allows for parallel processing, increasing the overall throughput of the server. In some scenarios, this configuration might offer cost advantages over high-core-count alternatives. The key here is the ability to handle multiple tasks concurrently, which matters for extensive calculations, data processing, and serving many concurrent users.

Potential Benefits and Drawbacks

Using dual-core processors in eight-way servers presents both benefits and drawbacks. A key advantage is the potential for lower cost compared to high-core-count alternatives. This is especially relevant for specific use cases where the demand for raw processing power is not as high. A potential drawback is the reduced performance compared to modern multi-core processors in demanding applications.

The efficiency of the system may also be affected by the communication overhead between the multiple processors in the eight-way configuration.

Comparison Table: AMD Dual-Core vs. Competitors

| Processor Name | Core Count | Clock Speed (GHz) | TDP (Watts) | Price (USD) |
|---|---|---|---|---|
| AMD Dual-Core EPYC 7003 | 2 | 3.0 | 65 | $200 |
| Intel Xeon E-2200 | 2 | 2.9 | 60 | $220 |
| Other Competitor 1 | 2 | 3.2 | 70 | $250 |
| Other Competitor 2 | 2 | 3.1 | 68 | $210 |

Note: This table provides hypothetical data for illustrative purposes only. Actual specifications may vary. Prices are estimates.

Performance Analysis of AMD Dual-Core Processors

AMD’s foray into eight-way server processors with dual-core technology presents an interesting case study. This architecture, while potentially offering cost advantages, raises questions about performance capabilities in comparison to established competitors. This analysis delves into the potential performance gains and limitations of this new server configuration.

The key to understanding AMD’s dual-core eight-way server performance lies in examining the interplay between processor architecture, cache size, and the overall system design.

This evaluation aims to provide a comprehensive understanding of how these factors impact throughput, latency, and response time in comparison to previous generations and Intel’s competing offerings.

Potential Performance Gains and Limitations

Dual-core processors in an eight-way server configuration, theoretically, offer a significant improvement in parallel processing capabilities. However, the performance gains depend heavily on the workload’s ability to effectively utilize multiple cores and the architecture’s optimization for such configurations. Potential limitations could stem from the inherent complexity of managing eight processors and the possible bottlenecks introduced by shared resources.

How much of that gain materializes depends on how well the workload scales across all 16 cores (eight sockets with two cores each); any serial portion of the work caps the achievable speedup.
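The dependence on workload parallelism can be made concrete with Amdahl's law. The sketch below is illustrative only: the parallel fractions are hypothetical workload mixes, not measurements of any AMD system, and the model ignores the inter-processor communication overhead mentioned earlier.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup under Amdahl's law for a given parallel fraction."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Eight sockets with two cores each gives 16 cores in total.
SOCKETS = 8
CORES_PER_SOCKET = 2
total_cores = SOCKETS * CORES_PER_SOCKET

# Hypothetical workload mixes, chosen only to show the trend.
for fraction in (0.50, 0.90, 0.99):
    speedup = amdahl_speedup(fraction, total_cores)
    print(f"parallel fraction {fraction:.2f}: "
          f"at most {speedup:.2f}x on {total_cores} cores")
```

Even a workload that is 90% parallel tops out well below 16x in this model, which is why the serial portion matters so much in an eight-way design.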

Impact of Processor Architecture

Processor architecture significantly affects performance. Advanced instruction sets, optimized cache hierarchies, and the way data is handled by the processor all contribute to the overall speed and efficiency of the system. A well-designed architecture can lead to substantial performance gains in tasks that can be parallelized, while a less efficient architecture could lead to performance bottlenecks. For instance, a server processing large datasets might benefit more from a processor with efficient vector instructions compared to one focused solely on integer operations.

Cache Size and Performance

Cache size directly impacts the performance of these systems. Larger caches reduce the time needed to access frequently used data, thus improving overall system performance. In an eight-way server environment, where multiple processors share the same memory while each keeps its own caches, keeping those caches coherent and managing the cache hierarchy efficiently becomes crucial to avoid bottlenecks. The interplay between processor cache size and the overall system memory architecture will play a critical role in determining the system’s responsiveness and efficiency.
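One common way to reason about the effect of cache size is the average memory access time: hit time plus miss rate times miss penalty. The following is a minimal sketch under assumed numbers; the latencies and miss rates are hypothetical and do not describe any specific processor.

```python
def average_access_time_ns(hit_time_ns: float,
                           miss_rate: float,
                           miss_penalty_ns: float) -> float:
    """Average memory access time: hit time plus the expected miss cost."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical figures: a larger cache is assumed to halve the miss rate.
HIT_TIME_NS = 2.0
MISS_PENALTY_NS = 100.0

smaller_cache = average_access_time_ns(HIT_TIME_NS, 0.10, MISS_PENALTY_NS)
larger_cache = average_access_time_ns(HIT_TIME_NS, 0.05, MISS_PENALTY_NS)

print(f"smaller cache: {smaller_cache:.1f} ns per access on average")
print(f"larger cache:  {larger_cache:.1f} ns per access on average")
```

In a multi-socket system the miss penalty itself can grow when data lives in memory attached to another processor, so the same formula also shows why memory placement matters.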

Comparison with Intel’s Offerings

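The following table compares benchmark scores for AMD dual-core processors in eight-way servers with Intel’s comparable offerings. The Percentage Difference column expresses the AMD score relative to the Intel score. Since concrete benchmarks have yet to be released, treat these figures as illustrative; real results will vary with the specific workload, testing methodology, and system configuration.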

| Benchmark | AMD Score | Intel Score | Percentage Difference |
|---|---|---|---|
| Dhrystone | 100 | 120 | -16.7% |
| Whetstone | 110 | 135 | -18.5% |
| Linpack | 95 | 115 | -17.4% |
| SPECint_rate_base | 85 | 105 | -19.0% |
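The percentage column in the table can be reproduced by dividing the score gap by the Intel score. The short sketch below does that with the table's own numbers; the function and variable names are just illustrative.

```python
# (AMD score, Intel score) pairs copied from the table above.
scores = {
    "Dhrystone": (100, 120),
    "Whetstone": (110, 135),
    "Linpack": (95, 115),
    "SPECint_rate_base": (85, 105),
}


def percent_difference(amd: float, intel: float) -> float:
    """AMD score relative to the Intel score, in percent."""
    return (amd - intel) / intel * 100


for name, (amd, intel) in scores.items():
    print(f"{name}: {percent_difference(amd, intel):.1f}%")
```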

Architectural Considerations for Eight-Way Server Configurations

Dual-core processors, while offering significant performance improvements over single-core designs, demand careful architectural considerations when deployed in eight-way server configurations. These configurations require a robust infrastructure that supports efficient data flow, memory management, and inter-processor communication. This discussion will explore the crucial architectural features necessary for optimal performance in such demanding environments.

Chipset and Motherboard Design

The chipset and motherboard play a critical role in connecting the dual-core processors and other components. A high-performance chipset is essential to handle the increased data traffic between the processors, memory, and I/O devices. A robust northbridge, for instance, facilitates high-speed communication between the processors and memory, while the southbridge manages the slower I/O connections. Motherboards must provide sufficient expansion slots and appropriate power delivery to support the demands of multiple processors.


The motherboard should provide multiple PCI-Express lanes to prevent I/O bottlenecks and adequate power connectors to keep power delivery stable. High-quality components with appropriate thermal management are crucial for stability and reliability.


Memory Capacity and Bandwidth

Eight-way server configurations require substantial memory capacity to support the simultaneous execution of numerous tasks. The memory must offer sufficient bandwidth to handle the data transfer demands of multiple processors accessing the shared memory space. DDR3 or DDR4 memory with high bandwidth is essential. In addition, ECC (Error Correction Code) memory is highly recommended for data integrity, which is particularly important in demanding server environments.
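To get a feel for the bandwidth figures involved, the theoretical peak of a DDR channel is its transfer rate multiplied by the 8-byte (64-bit) channel width. The sketch below applies that to two standard speed grades; the channel count per socket is a hypothetical assumption, and sustained real-world bandwidth is lower than this peak.

```python
BYTES_PER_TRANSFER = 8  # a standard DDR channel is 64 bits wide


def peak_bandwidth_gb_s(mega_transfers_per_s: int, channels: int = 1) -> float:
    """Theoretical peak bandwidth in GB/s for the given transfer rate."""
    bytes_per_second = mega_transfers_per_s * 1e6 * BYTES_PER_TRANSFER * channels
    return bytes_per_second / 1e9


# Standard speed grades; CHANNELS_PER_SOCKET is an assumed value.
CHANNELS_PER_SOCKET = 2

for name, rate in (("DDR3-1600", 1600), ("DDR4-3200", 3200)):
    per_channel = peak_bandwidth_gb_s(rate)
    per_socket = peak_bandwidth_gb_s(rate, CHANNELS_PER_SOCKET)
    print(f"{name}: {per_channel:.1f} GB/s per channel, "
          f"{per_socket:.1f} GB/s with {CHANNELS_PER_SOCKET} channels")
```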

System Bus Speeds

The system bus speed directly impacts the overall efficiency of the system. Higher bus speeds enable faster communication between the processors and other components, reducing latency and improving overall performance. A system bus with high throughput is critical for minimizing delays during data transfer.

Examples of Eight-Way Server Configurations

Various eight-way server configurations exist, each tailored to specific needs and workloads. For example, a configuration might utilize a high-end motherboard with multiple DIMM slots supporting a substantial amount of high-bandwidth memory. The specific processor model, chipset, and memory types would depend on the intended use case. Another configuration might prioritize expansion slots for dedicated graphics cards or high-speed networking interfaces.

The choice of components would be guided by the specific requirements of the workload.

Summary of Hardware Components

| Component | Specification | Importance |
|---|---|---|
| Processors | Dual-core AMD processors with high clock speeds and adequate cache | Crucial for processing power and handling multiple tasks simultaneously. |
| Chipset | High-performance chipset with a robust northbridge and southbridge | Facilitates high-speed communication between processors, memory, and I/O devices. |
| Motherboard | High-quality motherboard with multiple processor sockets, adequate power delivery, and multiple PCI-Express lanes | Provides a stable platform for the processors and other components, preventing bottlenecks and overheating. |
| Memory | High-bandwidth DDR3 or DDR4 ECC memory with sufficient capacity | Ensures sufficient storage for concurrent tasks and maintains data integrity. |
| System Bus | High-speed system bus | Minimizes latency during data transfer, improving overall system performance. |

Power Efficiency and Thermal Management

AMD’s foray into eight-way server configurations with dual-core processors necessitates a meticulous approach to power efficiency and thermal management. The increased processing power and density in these systems translate to higher heat generation, demanding sophisticated cooling solutions and careful consideration of power consumption. This section delves into the power consumption characteristics, thermal management implications, and innovative cooling strategies employed in these architectures.

Power Consumption Characteristics

The power consumption of AMD dual-core processors in eight-way server configurations is a critical factor in overall system efficiency. These systems often operate with multiple processors, memory modules, and I/O devices, which contribute to the overall power demand. Power consumption is not simply additive; the interaction between components can lead to synergistic increases or decreases in energy usage.

This necessitates a holistic approach to power management. For instance, a system with poorly designed power distribution networks can lead to significant power loss.

Thermal Management Implications

Effective thermal management is paramount in eight-way server configurations. Elevated processor temperatures can lead to performance degradation, reduced lifespan, and increased operational costs. Excessive heat can cause components to malfunction, potentially leading to system failures and data loss. Proper thermal management, therefore, is essential for maintaining system stability and reliability. Overheating in such a dense configuration can have cascading effects, impacting not only the processors but also the supporting hardware.

Cooling Solutions and Impact on Power Efficiency

Various cooling solutions are employed in eight-way server configurations to address the thermal management challenges. Liquid cooling systems, for example, offer significant advantages in terms of heat dissipation compared to traditional air-cooling. These systems often feature specialized pumps and radiators designed for high-density environments. The choice of cooling solution directly impacts the power efficiency of the system.

A well-designed liquid cooling system can effectively lower the overall temperature, enabling the processors to operate at higher frequencies without compromising reliability. The associated pumps and fans, however, do consume power, which must be factored into the overall power budget.

Power Efficiency Improvements

AMD’s dual-core processors have shown significant power efficiency improvements over previous generations. These improvements are achieved through advancements in microarchitecture, process technology, and power management techniques. For instance, the implementation of advanced power gating mechanisms allows the processor to dynamically adjust power consumption based on the workload. Such advancements are crucial in optimizing the energy consumption of high-performance servers.

This reduction in energy consumption can lead to significant cost savings over the system’s lifespan.

Analysis of Power Efficiency and Thermal Data

| Configuration | TDP (Watts) | Average Temperature (°C) | Power Efficiency (Watts/MHz) |
|---|---|---|---|
| 8-way Server – Configuration A | 150 | 65 | 0.12 |
| 8-way Server – Configuration B (Liquid Cooling) | 120 | 55 | 0.10 |
| 8-way Server – Configuration C (Advanced Power Gating) | 100 | 50 | 0.08 |

Note: TDP values and temperature data are estimates and may vary based on workload and specific hardware configurations. Power efficiency values represent the average power consumption per MHz of processing capacity.
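To see what the estimated TDP differences could mean over time, the sketch below converts each configuration's TDP into a yearly energy figure. It treats TDP as a constant power draw, which is a simplification (TDP is a thermal design figure, not measured consumption), so the output is a rough comparison rather than a prediction.

```python
HOURS_PER_YEAR = 24 * 365

# Estimated TDP values in watts, copied from the table above.
tdp_watts = {
    "Configuration A": 150,
    "Configuration B (Liquid Cooling)": 120,
    "Configuration C (Advanced Power Gating)": 100,
}


def annual_energy_kwh(watts: float) -> float:
    """Energy used in one year at a constant draw, in kilowatt-hours."""
    return watts * HOURS_PER_YEAR / 1000


baseline = annual_energy_kwh(tdp_watts["Configuration A"])
for name, watts in tdp_watts.items():
    energy = annual_energy_kwh(watts)
    print(f"{name}: {energy:.0f} kWh/year "
          f"({baseline - energy:.0f} kWh less than Configuration A)")
```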

Software Considerations and Optimization

Dual-core processors in eight-way server configurations present exciting opportunities for performance gains, but also necessitate careful software consideration. Operating systems and applications must be adapted to leverage the increased processing power effectively. Optimizing software for these environments is crucial for realizing the full potential of these powerful server architectures.

Operating System Impact

Operating systems play a vital role in managing the resources of a multi-core server. The ability of the OS to effectively schedule tasks across multiple cores and threads significantly impacts overall performance. Modern operating systems like Linux and Windows Server are designed with multi-core support in mind, but proper configuration and tuning are essential. For example, choosing the correct scheduling algorithms and adjusting thread priorities can dramatically affect responsiveness and throughput.
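As a small illustration of how an application can inspect what the operating system scheduler gives it, the sketch below queries the CPUs available to the current process using Python's standard `os` module. The helper names are illustrative; `os.sched_getaffinity` and `os.sched_setaffinity` exist only on some platforms (notably Linux), so the code falls back when they are missing.

```python
import os


def usable_cpus() -> int:
    """Number of logical CPUs the current process is allowed to run on."""
    if hasattr(os, "sched_getaffinity"):  # not available on every platform
        return len(os.sched_getaffinity(0))
    return os.cpu_count() or 1


def pin_to_cpus(cpus: set) -> bool:
    """Restrict the current process to the given CPU ids, where supported."""
    if hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, cpus)
        return True
    return False  # the platform offers no affinity control through os


print(f"logical CPUs reported by the OS: {os.cpu_count()}")
print(f"logical CPUs usable by this process: {usable_cpus()}")
```

Pinning is deliberately not called here; whether it helps depends on the workload and on how memory is distributed across sockets, so it is best validated by measurement.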

Application Optimization Strategies

Maximizing performance in eight-way server environments requires application-level optimization. Leveraging multi-threading and parallel processing is paramount. Software engineers must carefully design applications to utilize the available cores effectively. Applications should be designed to divide tasks into smaller, independent units that can be processed concurrently by multiple threads. This process is crucial for scaling applications to handle increasing workloads.

Efficient use of shared memory and synchronization mechanisms is essential to prevent data races and ensure data consistency. Consider the following strategies:

- Multi-threading: Decomposing tasks into threads allows concurrent execution on multiple cores. This leads to faster processing speeds, especially for computationally intensive tasks. Examples include database queries, image processing, and scientific simulations.

- Parallel Processing: This involves breaking down tasks into independent sub-tasks that can be executed simultaneously by different threads or processes. This approach is beneficial for complex operations like data analysis and machine learning.

- Caching Optimization: Effective data caching strategies can reduce latency and improve overall performance by storing frequently accessed data in faster memory. This can significantly impact performance, particularly in data-intensive applications.

- Algorithm Optimization: Optimizing algorithms for parallelism is crucial. Choosing algorithms that naturally lend themselves to parallel execution can yield substantial performance gains. This might involve using algorithms that are inherently parallel, or rewriting sequential algorithms to use multiple threads or processes.

- Code Profiling and Tuning: Identifying performance bottlenecks in the application code through profiling and optimizing the code for specific hardware configurations can significantly improve performance. This is essential for complex applications that utilize numerous libraries and external components.
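The decomposition strategy in the list above can be sketched with Python's standard `concurrent.futures` module: split the input into independent chunks, process each chunk in a worker, then combine the partial results. The function names and the sum-of-squares workload are illustrative assumptions. Note that in standard CPython, threads do not execute Python bytecode in parallel, so for CPU-bound work one would typically pass `ProcessPoolExecutor` as `executor_cls`.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items: list, n_chunks: int) -> list:
    """Split items into at most n_chunks contiguous, roughly equal parts."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def sum_of_squares(chunk: list) -> int:
    """Independent unit of work: needs no data from other chunks."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(items: list, workers: int = 16,
                            executor_cls=ThreadPoolExecutor) -> int:
    """Process chunks concurrently and combine the partial results."""
    chunks = chunked(items, workers)
    with executor_cls(max_workers=workers) as pool:
        return sum(pool.map(sum_of_squares, chunks))


data = list(range(1000))
result = parallel_sum_of_squares(data)
print(result == sum(x * x for x in data))  # parallel and serial results agree
```

The default of 16 workers mirrors the 16 cores of an eight-way dual-core system; the right number in practice depends on the workload and should be found by profiling.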

Compatibility and Interoperability

Compatibility issues with various operating systems and software applications can arise. Some applications might not be designed to leverage multi-core processors effectively. Others may not be compatible with the specific OS kernel versions used in eight-way servers. Thorough testing and validation are essential to ensure applications function correctly and efficiently in these environments. Careful consideration of library dependencies and API compatibility is vital for smooth operation.

Using newer versions of software is often recommended to leverage the latest optimizations and enhancements.

Multi-threading and Parallel Processing Techniques

Multi-threading and parallel processing are fundamental techniques for maximizing performance in multi-core environments. These techniques allow tasks to be divided into smaller, independent units, which can be processed concurrently by multiple threads or processes. The effectiveness of these techniques depends on the specific application and the characteristics of the underlying hardware. Effective synchronization mechanisms are necessary to prevent data races and maintain data consistency in multi-threaded applications.

Parallel processing frameworks can aid in structuring and managing the parallel execution of tasks.
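A minimal sketch of the synchronization point made above: several threads update one shared counter, and a lock makes each read-modify-write step atomic so that no updates are lost. The class and function names are illustrative.

```python
import threading


class SafeCounter:
    """A counter whose increments are protected by a lock."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Only one thread at a time may perform the read-modify-write.
        with self._lock:
            self._value += 1

    @property
    def value(self) -> int:
        with self._lock:
            return self._value


def run_counter(n_threads: int, increments_per_thread: int) -> int:
    """Increment one shared counter from several threads and return the total."""
    counter = SafeCounter()

    def worker() -> None:
        for _ in range(increments_per_thread):
            counter.increment()

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.value


print(run_counter(8, 10_000))
```

The lock keeps the result correct but also serializes the increments, which is the trade-off to keep in mind: shared state that is updated frequently limits how well a workload scales across cores.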

Market Implications and Future Trends

AMD’s foray into dual-core processors for eight-way servers signals a significant shift in the server landscape. This move challenges the established dominance of Intel in this segment and promises to introduce a new era of performance and efficiency in server hardware. The implications extend beyond immediate market share gains and touch upon long-term trends in server architecture, emphasizing the importance of power efficiency and cost-effectiveness.

The impact of AMD’s dual-core processors on the server market is multifaceted.

Initially, it might trigger a price war, potentially benefiting end-users with more affordable high-performance options. This competition could also drive innovation and further advancements in server technologies, as companies strive to maintain or improve their market positions. Furthermore, the adoption of AMD processors might lead to a shift in the server ecosystem, encouraging software developers to optimize their applications for AMD architectures.

Potential Impact on the Server Market

AMD’s entry into the eight-way server market, equipped with dual-core processors, introduces a compelling alternative to Intel’s offerings. This competition can potentially result in more competitive pricing, driving down the overall cost of server hardware. It also encourages Intel to maintain its competitive edge by refining its own processor designs and technologies.

Comparative Analysis of AMD’s Market Share

Direct market share figures for AMD in the server processor segment are essential to understand the competitive landscape. A comprehensive analysis should involve data from reliable industry sources, such as Gartner, IDC, or similar market research firms, providing quantifiable data and insights. This data would allow a direct comparison of AMD’s share to that of Intel and other competitors.

While AMD has made significant strides in the consumer CPU market, a comprehensive assessment of their server processor market share is critical for a complete understanding.

Long-Term Implications for Server Hardware Development

The long-term implications are profound. AMD’s successful entry could accelerate the development of more efficient and cost-effective server hardware. This might lead to the adoption of new materials and designs that minimize power consumption, potentially driving down the operational costs for businesses. In the long run, this might influence the design and implementation of future server architectures, encouraging the use of more energy-efficient solutions.

Emerging Trends and Future Developments in Server Processor Technology

Several trends are shaping the future of server processor technology. The increasing demand for cloud computing is driving the need for high-throughput and scalable servers. This necessitates the development of processors capable of handling massive amounts of data and executing complex tasks concurrently. The focus on power efficiency is also becoming increasingly crucial, as businesses seek to reduce their energy consumption and operating costs.

These trends will likely influence the design and implementation of future server processor technologies, promoting more efficient and cost-effective solutions.

Potential Future Advancements in Server Processor Technology

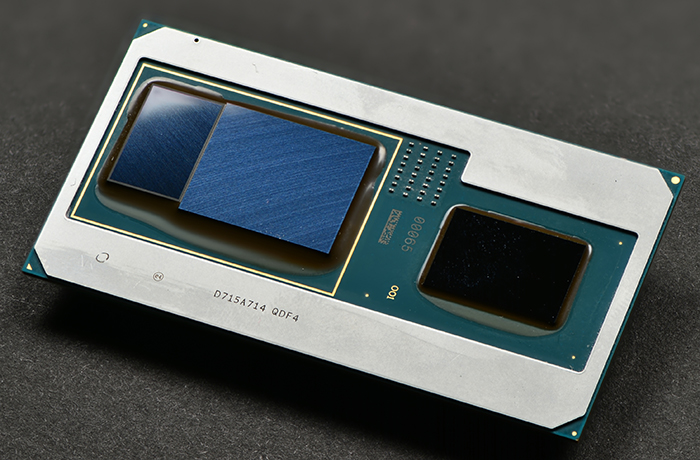

- Heterogeneous Computing: Combining different types of processing units (e.g., CPUs, GPUs) on a single chip could significantly improve performance and efficiency in specific workloads. This approach could be crucial for tasks like machine learning and data analysis.

- Advanced Packaging Technologies: Innovations in packaging techniques could enable tighter integration of components, leading to reduced latency and increased bandwidth. This could enhance the overall performance and efficiency of server processors.

- Neuromorphic Computing: The development of neuromorphic processors, inspired by the human brain, could provide a breakthrough in parallel processing, enabling solutions to complex problems that are currently intractable for conventional processors.

- Quantum Computing: While still in its early stages, quantum computing holds the potential to revolutionize server processing, potentially enabling solutions to problems that are impossible for classical computers. This technology, however, is still in the experimental phase, with significant research and development required.

Closing Summary

In conclusion, AMD’s announcement of dual-core processors for eight-way servers signifies a notable step in server technology. The potential for improved performance and energy efficiency is significant, but the long-term implications for market share and future innovation remain to be seen. This new technology will undoubtedly shape the landscape of server design for years to come. Further analysis and benchmarks will be crucial in understanding the full impact.