IBM Puts Blue Gene on the Shelf: Legacy and Impact

IBM has put its Blue Gene line on the shelf, signaling a significant shift in the supercomputing landscape. The decision marks the end of an era and invites a look back at the project’s history, innovations, and impact. This article examines the likely reasons behind the move, including shifting computing priorities, financial constraints, and the emergence of newer technologies, and considers the implications for high-performance computing, scientific research, and related industries.

Finally, it investigates alternative uses for the Blue Gene technology and assesses its legacy in the broader context of supercomputing.

The Blue Gene project, a pioneering effort in supercomputing, set out to build machines capable of tackling complex scientific challenges. The sections below trace its key technological advances and milestones before turning to possible uses of the technology outside high-performance computing.

Background of IBM’s Blue Gene Project

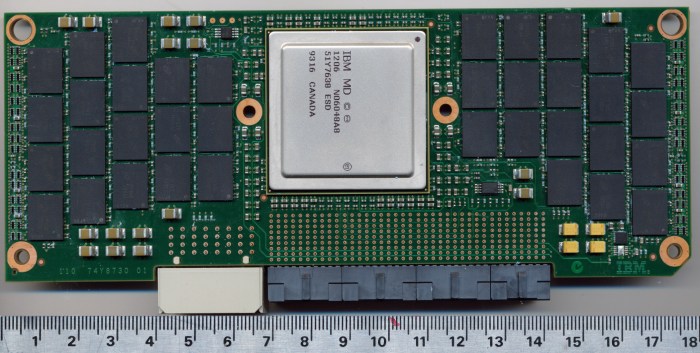

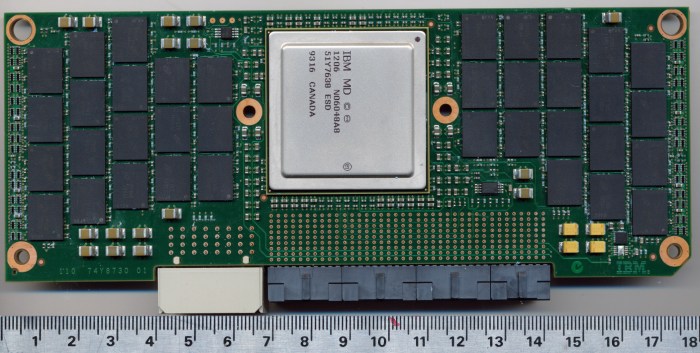

The IBM Blue Gene project stands as a landmark achievement in supercomputing, pushing the boundaries of parallel processing and computational power. It was not simply a collection of faster processors; it represented a reimagining of how to build and use massively parallel systems, and it had a significant impact on scientific research and technological advancement. The project aimed to create a highly scalable and powerful supercomputer capable of tackling complex scientific problems.

Its genesis was rooted in the need for increased computational resources to address challenges in areas like genomics, climate modeling, and materials science. The project’s ambitious goals, combined with its innovative approach, resulted in a powerful legacy.

Project Origins and Goals

IBM announced the Blue Gene project in December 1999 as a five-year, US$100 million research effort. Its core objective was to develop a massively parallel computer architecture capable of unprecedented performance, driven by scientific problems, starting with simulations of protein folding, that demanded far more processing power than conventional computers could provide. The stated target was a petaflop-scale system, able to perform on the order of a quadrillion (10^15) calculations per second, far beyond the supercomputers of the day.

Technological Advancements

The Blue Gene project introduced several key technological advancements. Its defining feature was an unusual approach to massively parallel processing: rather than relying on a small number of fast, power-hungry processors, it packed very large numbers of low-power PowerPC cores running at modest clock speeds into dense racks. A custom interconnect, most notably a three-dimensional torus network in which each node links directly to its six nearest neighbors, reduced communication latency between processors and kept the system efficient as it scaled. This design made it practical to handle very large datasets and algorithms that had previously been out of reach, and the project also pioneered methods for managing and coordinating enormous processor counts, improving system reliability and scalability.
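Blue Gene/L connected its compute nodes in a three-dimensional torus: each node links directly to six neighbors, and every row of nodes wraps around at the edges. The following Python sketch is a simplified model under stated assumptions (a hypothetical 8x8x8 partition and illustrative helper names; it ignores real routing, link contention, and Blue Gene’s other networks). It shows why the wraparound links shorten worst-case paths compared with a plain 3D mesh.

```python
def torus_neighbors(coord, dims):
    """Six nearest neighbors of `coord` in a 3D torus of shape `dims`.

    Every axis wraps around, so x = 0 is adjacent to x = dims[0] - 1.
    Assumes each dimension has at least 3 nodes (smaller rings repeat neighbors).
    """
    x, y, z = coord
    nx, ny, nz = dims
    return [
        ((x + 1) % nx, y, z), ((x - 1) % nx, y, z),
        (x, (y + 1) % ny, z), (x, (y - 1) % ny, z),
        (x, y, (z + 1) % nz), (x, y, (z - 1) % nz),
    ]


def torus_hops(a, b, dims):
    """Minimum link hops between nodes a and b, going the short way round each ring."""
    total = 0
    for ai, bi, n in zip(a, b, dims):
        d = abs(ai - bi)
        total += min(d, n - d)
    return total


def mesh_hops(a, b):
    """Hops in a plain 3D mesh with no wraparound links, for comparison."""
    return sum(abs(ai - bi) for ai, bi in zip(a, b))


def torus_diameter(dims):
    """Worst-case hop count: half of each ring (rounded down), summed over the axes."""
    return sum(n // 2 for n in dims)


dims = (8, 8, 8)  # hypothetical 512-node partition
corner, opposite = (0, 0, 0), (7, 7, 7)
print(torus_hops(corner, opposite, dims))  # 3: one wraparound hop per axis
print(mesh_hops(corner, opposite))         # 21: seven hops per axis without wraparound
print(torus_diameter(dims))                # 12
```

In this model the worst case in an 8x8x8 torus is 12 hops versus 21 for a mesh of the same size, which illustrates the kind of latency saving a torus is meant to deliver.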

Timeline of Milestones

- 1999-2004: Announcement and initial design. IBM set out to create a novel architecture for massively parallel computing, designing the hardware, building the software stack, and defining the core system components. The emphasis was on a highly scalable and reliable system able to coordinate an extremely large number of processors.

- 2004-2007: Blue Gene/L. The first-generation system was built and deployed, most prominently at Lawrence Livermore National Laboratory, and it led the TOP500 list of the world’s fastest supercomputers for several years starting in November 2004. Engineers had to manage complex interactions among tens of thousands of nodes, and the success of the core design paved the way for later generations.

- 2007-2012: Blue Gene/P and Blue Gene/Q. The second-generation Blue Gene/P, announced in 2007, and the third-generation Blue Gene/Q, whose Sequoia installation at Lawrence Livermore topped the TOP500 list in June 2012, built on lessons from earlier systems to deliver greater performance, scalability, and energy efficiency.

Applications and Uses

The Blue Gene supercomputers found applications in a variety of scientific fields. In genomics, they were used to analyze large datasets generated by sequencing projects, supporting research into human genetics and disease. In climate modeling, they provided the computational power needed for complex simulations of weather patterns and climate change. Blue Gene systems were also used in materials science, allowing scientists to simulate the behavior of materials under extreme conditions and to speed the search for new materials with desired properties.

Reasons for “Shelving” the Blue Gene Project

The Blue Gene project, a significant undertaking in high-performance computing, ultimately saw its development and support curtailed. This decision wasn’t a simple “shelving” but rather a strategic shift in priorities, acknowledging the evolving landscape of computing and the inherent challenges in large-scale, specialized projects. Understanding the reasons behind this decision provides valuable insights into the dynamics of technological advancement and resource allocation in the industry.

Potential Shifts in Computing Priorities

The computing landscape constantly evolves. Emerging technologies such as specialized accelerators and cloud computing often offer more cost-effective and flexible solutions for specific computational needs. The growing accessibility of powerful cloud platforms, coupled with advances in specialized hardware, may have made Blue Gene’s large, dedicated infrastructure less appealing for some research and development applications. A broader industry emphasis on adaptable, general-purpose architectures may also have favored other strategies.

Emerging Technologies and Their Impact

The rise of graphics processing units (GPUs) as powerful accelerators for parallel computations, and the concurrent development of cloud-based infrastructure, represented significant advancements. These technologies offered a potentially more accessible and adaptable approach to achieving high-performance computing compared to the specialized, often bespoke, nature of the Blue Gene architecture. The development of these alternative approaches may have rendered the Blue Gene project less competitive and efficient for many research endeavors.

Financial Constraints and Market Factors

Large-scale projects like Blue Gene require substantial, sustained investment in research, development, and infrastructure. Keeping that investment going over many years, against an uncertain return, could have become a significant challenge. Market forces also play a crucial role: if the project’s capabilities did not match current or anticipated demand, or if more cost-effective alternatives became available, reallocating resources would have been a logical strategic choice.

Comparison with Contemporary Computing Projects

Comparing the Blue Gene project to other contemporary computing projects reveals differing approaches to achieving high-performance computing. While Blue Gene focused on a highly specialized architecture for specific scientific tasks, other projects may have prioritized broader applicability or more cost-effective solutions. The competitive landscape and the evolving priorities of the research community could have played a critical role in influencing the decision-making process.

Influence on Newer Technologies

Despite the shelving of the Blue Gene project, its advances influenced later technologies. Its design, particularly its emphasis on large numbers of power-efficient processors joined by fast interconnects, contributed to the broader understanding of parallel processing on which today’s accelerator-based and cloud-based systems build. The lessons learned from Blue Gene about managing and optimizing massively parallel systems have, directly or indirectly, informed contemporary research and development in high-performance computing.

Implications and Impact of the Decision

The decision to shelve IBM’s Blue Gene project has significant implications for the future of high-performance computing and scientific research. Although it may look like a setback, it also creates opportunities for other initiatives and may accelerate progress in other areas of computing. The shift calls for a careful look at its likely effects on various stakeholders and industries.

Potential Effects on the Broader Field of High-Performance Computing

The Blue Gene project, despite its shelving, has left a lasting impact on the field of high-performance computing. Its innovative architecture and advancements in parallel processing have influenced subsequent generations of supercomputers. The project’s legacy will likely continue to inspire future research and development in this area. However, the absence of continued development and refinement on the Blue Gene platform could lead to a stagnation in certain aspects of high-performance computing, particularly in specific niche applications.

Potential Impact on Scientific Research and Development

The Blue Gene project held the potential to accelerate scientific breakthroughs in various fields. Its capabilities could have been crucial for tackling complex problems in genomics, climate modeling, materials science, and more. The decision to shelve the project might hinder progress in these areas, particularly those that heavily rely on large-scale simulations. Scientists may need to explore alternative platforms and strategies to achieve their research objectives.

The absence of a dedicated, high-performance computing platform focused on the Blue Gene architecture could affect research outcomes.

Examples of Potential Research Areas Affected

Research areas that could be affected include drug discovery and development, where the project’s computational capabilities might have significantly accelerated the design and testing of new drugs and therapies. Climate modeling could likewise suffer from the lack of continued investment in this technology, since accurate climate models require immense computational power to simulate complex atmospheric and oceanic interactions.

Furthermore, research in materials science, focusing on the properties and behavior of new materials, may face challenges due to the limitations of available high-performance computing platforms.

Potential Implications on Related Industries or Fields of Study

The shelving of the Blue Gene project may impact industries directly reliant on high-performance computing, including aerospace engineering and financial modeling. Aerospace simulations and complex financial models often require significant computational resources, and the lack of further development in Blue Gene may hinder their advancement. The impact on related fields like bioinformatics and genomics research could be substantial, affecting the pace of discoveries and innovations in these areas.

Summary Table of Impact on Computing Industry Segments

| Category | Positive Impact | Negative Impact | Neutral Impact |
|---|---|---|---|
| High-Performance Computing (HPC) | Potential for alternative architectures to emerge and fill gaps left by Blue Gene | Potential stagnation in certain HPC sub-domains | No direct impact on overall HPC trends in the short term |
| Scientific Research | Potential for the development of new and innovative methods to tackle complex problems | Potential delays in research outcomes for projects relying heavily on Blue Gene-like architectures | No significant impact on areas that don’t rely heavily on large-scale simulations |
| Related Industries | Potential for the development of new tools and methodologies in fields like financial modeling and aerospace engineering | Potential slowdown in innovation and advancements in industries heavily reliant on Blue Gene-like capabilities | No significant impact on industries not directly using Blue Gene technology |

Alternative Uses of Blue Gene Technology

The shelving of the IBM Blue Gene project, while seemingly a setback for high-performance computing, opens the door to alternative applications of its technology. Blue Gene’s architecture, designed for massive parallelism, has capabilities that extend beyond its original purpose. This section examines how the underlying architecture and components might be adapted to new challenges in diverse fields.

Potential Applications in Data Analytics

Blue Gene’s parallel processing power, originally optimized for complex scientific simulations, is well suited to accelerating data analysis. Massive datasets in fields like genomics, finance, and the social sciences require sophisticated algorithms for pattern recognition and insight. Blue Gene’s architecture, with its extensive interconnects and ability to handle large-scale computations, could run parallel implementations of machine learning, data mining, and statistical analysis algorithms.

This allows for faster processing and potentially more accurate insights from the vast amounts of data being generated today.
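Many of the statistical tasks above boil down to computing partial results on each node and then combining them. A minimal sketch of that pattern in plain Python, assuming each node already holds its own slice of the data and using a hypothetical `tree_reduce` helper (no real interconnect or MPI is involved), merges partial results pairwise so that P nodes finish in about log2(P) rounds rather than P - 1 sequential steps.

```python
def tree_reduce(partials, combine):
    """Combine per-node partial results pairwise, one level per round.

    Returns (result, rounds). For P partial results this takes
    ceil(log2(P)) rounds, versus P - 1 steps for a sequential fold.
    """
    if not partials:
        raise ValueError("need at least one partial result")
    level = list(partials)
    rounds = 0
    while len(level) > 1:
        merged = [combine(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            merged.append(level[-1])  # odd node out waits for the next round
        level = merged
        rounds += 1
    return level[0], rounds


# Hypothetical example: each "node" holds a slice of the data and reports (sum, count).
slices = [[1, 2, 3], [4, 5], [6], [7, 8, 9, 10]]
partials = [(sum(s), len(s)) for s in slices]
(total, count), rounds = tree_reduce(partials, lambda a, b: (a[0] + b[0], a[1] + b[1]))
mean = total / count
print(total, count, mean, rounds)  # 55 10 5.5 2
```

Carrying (sum, count) pairs instead of per-node means keeps the combination exact: averaging the four slice means here would give 5.25 rather than the true mean of 5.5.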

Specialized Simulations in Diverse Fields

Although Blue Gene’s architecture was designed for scientific simulation, it can be adapted to specialized simulations in other domains. For example, complex biological processes such as protein folding or drug interactions could benefit from its computational power. Its massively parallel, high-precision floating-point capabilities also apply to financial modeling, such as simulating market behavior and assessing risk.
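Risk simulations of this kind are usually Monte Carlo computations, which split naturally across many processors because each batch of scenarios is independent. The sketch below is a deliberately simplified, hypothetical example: it assumes normally distributed daily returns (real markets have fatter tails), a portfolio worth 1,000,000 units, and illustrative helper names, and it runs the simulated "nodes" one after another in a single process.

```python
import random


def simulate_losses(n_paths, seed, mu=0.0, sigma=0.02, value=1_000_000.0):
    """One-day portfolio losses under a toy model of normally distributed returns.

    Each worker gets its own seeded generator so batches are independent and
    reproducible; production code would use a dedicated parallel RNG scheme.
    """
    rng = random.Random(seed)
    return [-value * rng.gauss(mu, sigma) for _ in range(n_paths)]


def value_at_risk(losses, confidence=0.99):
    """Empirical VaR: the loss level exceeded in roughly (1 - confidence) of scenarios."""
    ordered = sorted(losses)
    index = min(int(confidence * len(ordered)), len(ordered) - 1)
    return ordered[index]


# Each hypothetical node simulates its own batch; no batch depends on another,
# so on a parallel machine they could all run at the same time.
n_nodes, paths_per_node = 8, 5_000
all_losses = []
for node in range(n_nodes):
    all_losses.extend(simulate_losses(paths_per_node, seed=node))

var_99 = value_at_risk(all_losses)
print(f"99% one-day VaR: {var_99:,.0f}")
```

Because the batches interact only when their results are pooled, adding nodes mainly adds scenarios rather than communication, which is why this workload suits massively parallel hardware.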

New Industries and Applications

The Blue Gene technology’s adaptability could open up new possibilities in industries beyond traditional scientific and technological sectors. Imagine a scenario where pharmaceutical companies leverage Blue Gene to simulate drug interactions with biological systems at an unprecedented level of detail, potentially accelerating drug discovery. Financial institutions could utilize its capabilities to simulate market scenarios and develop more robust risk management strategies.

The possibilities are as vast as the data and problems requiring complex solutions.

Table of Potential Alternative Uses

| Field | Application Details | Challenges | Success Factors |

|---|---|---|---|

| Drug Discovery | Simulating drug interactions with biological systems at high precision, potentially accelerating drug development and reducing trial and error. | Developing algorithms and models to accurately represent complex biological processes, ensuring the data generated by the simulations are credible and reliable. | Collaboration between computer scientists, biologists, and pharmaceutical researchers; development of robust simulation models that incorporate experimental data. |

| Financial Modeling | Simulating market scenarios, identifying potential risks, and developing more robust risk management strategies. | Accurately modeling market behavior, considering factors like human behavior and unexpected events. Ensuring the model can handle complex and dynamic market conditions. | Access to real-time market data, integration with existing financial models, validation through historical market data, and ongoing monitoring and refinement. |

| Materials Science | Simulating the behavior of materials under extreme conditions, leading to the development of new materials with improved properties. | Accurately modeling the complex interactions between atoms and molecules in materials, ensuring the models capture the relevant physical phenomena. | Interdisciplinary collaboration between material scientists and computer scientists, development of validated simulation models, access to high-quality material data. |

| Climate Modeling | Simulating climate change impacts, predicting future scenarios, and developing strategies for mitigating environmental risks. | Accurately incorporating complex climate variables, including feedback loops, and handling uncertainties inherent in climate models. | Collaboration between climate scientists and computer scientists, integration with existing climate models, validation against historical climate data. |

Legacy and Future of Supercomputing

The IBM Blue Gene project, although ultimately “shelved” in its original form, leaves an indelible mark on the history of supercomputing. Its innovative architecture and record-setting performance have profoundly influenced the field, even though further development has stopped. Understanding its legacy requires examining its impact on the broader landscape of supercomputing and the trends shaping the future of this crucial technology. The Blue Gene project represents a pivotal moment in the evolution of supercomputers, showcasing the potential of massively parallel architectures.

Its focus on scalability and efficiency, while initially driven by a specific research agenda, laid the groundwork for future generations of high-performance computing systems. This legacy is not merely historical; it directly informs contemporary approaches to designing and deploying powerful computing resources.

Blue Gene’s Contribution to Supercomputing History

The Blue Gene project’s contributions to supercomputing are multifaceted. It significantly advanced the understanding of parallel processing and demonstrated the feasibility of building extremely large-scale systems. Its innovative architecture, emphasizing modularity and interconnection, influenced the design of subsequent supercomputers. Furthermore, the project spurred advancements in software development tools and methodologies required to manage and utilize such complex systems.

Comparison to Other Notable Supercomputer Projects

The Blue Gene project is comparable to other pioneering supercomputer projects like the Cray supercomputers and the ASCI Red and Blue projects. All represent significant leaps in technological capability and often serve as benchmarks for subsequent generations of machines. A crucial difference lies in the architectural approach. While some focused on high clock speeds and optimized individual processors, Blue Gene emphasized a massive number of interconnected, simpler processors, highlighting a different path to high performance.

This diversification in approaches to supercomputing demonstrates the breadth and depth of the field.
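Amdahl’s law is a standard way to reason about the trade-off between many simpler processors and fewer fast ones, because it makes the serial part of a program explicit. The sketch below uses hypothetical core counts and relative speeds chosen only for illustration, not actual Blue Gene or Cray specifications, and it ignores communication costs.

```python
def amdahl_speedup(parallel_fraction, n_cores, core_speed=1.0):
    """Speedup relative to a single reference core, following Amdahl's law.

    parallel_fraction: share of the run time that can be spread across cores (0 to 1).
    core_speed: speed of each core relative to the reference core.
    """
    serial = 1.0 - parallel_fraction
    run_time = (serial + parallel_fraction / n_cores) / core_speed
    return 1.0 / run_time


# Hypothetical designs: many modest cores versus fewer cores that are each 4x faster.
many_slow = dict(n_cores=4096, core_speed=1.0)
few_fast = dict(n_cores=64, core_speed=4.0)

for p in (0.9, 0.999):
    print(p, round(amdahl_speedup(p, **many_slow), 1), round(amdahl_speedup(p, **few_fast), 1))
```

With these made-up numbers, the fast-core design wins when 10% of the work is serial (about 35x versus 10x), while the many-core design wins when only 0.1% is serial (about 804x versus 241x). Designs like Blue Gene pay off for workloads that are almost entirely parallel.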

Trends and Directions in Supercomputing Evolution

Current trends in supercomputing are moving towards hybrid architectures, integrating CPUs and GPUs for optimized performance. Cloud-based computing and access to vast pools of computing resources are also shaping the field. These trends mirror the evolution from dedicated, on-site supercomputers to more distributed, cloud-based platforms, reflecting the increasing demand for access and flexibility in high-performance computing. The ability to scale and distribute processing resources is crucial for handling increasingly complex problems across various scientific and technological domains.

Impact on Future Generations

Future generations of computer scientists and engineers will analyze the Blue Gene project as a crucial experiment in parallel processing and the potential of massive-scale computing. The project’s legacy will likely be examined alongside other milestones in supercomputing history, contributing to a richer understanding of the evolution of this critical technology. It will be seen as a crucial step in understanding how to design, build, and manage increasingly complex computational environments.

Historical Perspective on Supercomputer Evolution

Supercomputers have evolved from early, specialized machines to the complex systems of today. The progression reflects the continuous push for higher performance and the increasing demands of scientific research and technological advancement. Early machines, like the ENIAC, laid the foundation, followed by generations of increasingly powerful and specialized systems. This evolution demonstrates the relentless pursuit of faster and more capable computational resources.

Public Perception and Reactions

The shelving of IBM’s Blue Gene project, a significant undertaking in supercomputing, likely generated a range of public reactions. Understanding these reactions requires considering the varied perspectives of stakeholders, the nature of the public discourse, and the broader implications for the perception of technological innovation. The decision to shelve a project of this magnitude, particularly one with such a prominent history, would have prompted discussion and speculation about scientific progress, corporate strategy, and public investment in research.

Potential Public Reactions

The public’s response to the Blue Gene project’s shelving was likely multifaceted, encompassing both disappointment and curiosity. A significant portion of the public, particularly those interested in scientific advancements and technological progress, might have expressed concern over the perceived setback in the field of supercomputing. Conversely, some might have viewed the decision as a pragmatic response to shifting priorities or market demands.

Concerns and Perspectives from Stakeholder Groups

Various stakeholder groups likely held distinct perspectives on the decision. Researchers and academics, deeply invested in the project’s potential, might have expressed disappointment over the lost opportunities for scientific advancement. Investors, concerned with returns on investment, may have viewed the shelving as a rational business decision. The general public, less familiar with the technical intricacies, might have focused on the implications for future technological innovation.

Public Discourse Surrounding the Decision

The public discourse surrounding the shelving of the Blue Gene project likely involved a complex interplay of perspectives. Discussions might have ranged from detailed technical analyses of the project’s limitations to broader societal reflections on the nature of technological investment. Media coverage would have played a critical role in shaping public opinion, presenting various viewpoints and prompting further discussion.

Broader Implications for the Perception of Technological Innovation

The shelving of the Blue Gene project may have influenced public perception of technological innovation. It might have highlighted the inherent risks and uncertainties associated with large-scale projects, the challenges in adapting to evolving technological landscapes, and the complexities of balancing scientific ambition with economic realities. This experience could have either instilled caution or fostered a sense of resilience among the public regarding technological pursuits.

Public Sentiment Regarding IBM’s Decision

Public sentiment regarding IBM’s decision to shelve the Blue Gene project was likely a mixture of understanding, concern, and even a degree of fascination. While some may have criticized IBM’s strategic choices, others may have acknowledged the need for adaptability and strategic pivots in the face of shifting market dynamics. Overall, the public likely exhibited a spectrum of responses, reflecting the diverse interests and perspectives of those involved.

Technological Advancements Since Blue Gene

The Blue Gene project, while groundbreaking in its time, was one point in the evolution of supercomputing. Significant advances in hardware, software, and architectural paradigms have since propelled the field far beyond the capabilities of even the most powerful Blue Gene systems, reflecting a broader trend toward greater computing power and versatility, with modern systems tackling a wider array of complex problems. The evolution of computing technology since the project’s inception has been marked by substantial progress on many fronts.

This includes the development of new hardware materials, more sophisticated algorithms, and increased access to massive datasets. These advancements have made it possible to tackle problems that were previously considered intractable.

Hardware Evolution

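The Blue Gene architecture, while innovative for its time, relied on the semiconductor technology of its era. Modern supercomputers benefit from far higher transistor counts and smaller feature sizes, which have dramatically increased processing power per unit of space and per watt. Moore’s Law, the observation that transistor counts roughly double about every two years, has held approximately for decades, though it has slowed in recent years. Clock speeds, however, largely stopped rising in the mid-2000s because of power limits, so the extra transistors have gone into more cores, wider vector units, and larger caches, the same power-driven logic that led Blue Gene to favor many modest cores over a few fast ones.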

Furthermore, the development of specialized hardware accelerators, such as GPUs and FPGAs, has enhanced performance in specific areas like parallel processing and data manipulation.

Software Advancements

Software development has kept pace with hardware evolution. Advanced programming models and libraries allow developers to exploit the parallelism of modern architectures more effectively, and open-source projects have played a crucial role in collaboration and the rapid development of new software. The Message Passing Interface (MPI), the standard that Blue Gene applications themselves relied on, has remained the dominant model for programming distributed-memory systems, while NVIDIA’s CUDA platform, first released in 2007, made GPUs practical for general-purpose scientific computing.
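The message-passing model behind MPI can be illustrated without MPI itself. This pure-Python sketch, with hypothetical function names and no actual MPI calls, splits a periodic 1D diffusion grid across four simulated "ranks". Each rank needs only one boundary value from each neighbor per step (a halo, or ghost-cell, exchange), which is the kind of nearest-neighbor message a real MPI program would send.

```python
def diffuse_global(u, alpha=0.25):
    """One explicit diffusion step on a periodic 1D grid, computed all at once."""
    n = len(u)
    return [u[i] + alpha * (u[i - 1] - 2 * u[i] + u[(i + 1) % n]) for i in range(n)]


def split(u, ranks):
    """Block-decompose the grid; assumes len(u) is divisible by `ranks`."""
    if len(u) % ranks:
        raise ValueError("grid size must be divisible by the number of ranks")
    size = len(u) // ranks
    return [u[r * size:(r + 1) * size] for r in range(ranks)]


def diffuse_decomposed(chunks, alpha=0.25):
    """The same step, but each rank sees only its own chunk plus one ghost cell per side.

    The ghost values stand in for the messages an MPI code would exchange with
    its left and right neighbors before updating its own points.
    """
    ranks = len(chunks)
    updated = []
    for r, local in enumerate(chunks):
        left_ghost = chunks[(r - 1) % ranks][-1]   # "received" from the left neighbor
        right_ghost = chunks[(r + 1) % ranks][0]   # "received" from the right neighbor
        padded = [left_ghost] + local + [right_ghost]
        updated.append([
            padded[i] + alpha * (padded[i - 1] - 2 * padded[i] + padded[i + 1])
            for i in range(1, len(padded) - 1)
        ])
    return updated


grid = [0.0] * 16
grid[5] = 1.0                      # a single hot spot
chunks = split(grid, ranks=4)
for _ in range(10):
    grid = diffuse_global(grid)
    chunks = diffuse_decomposed(chunks)

reassembled = [x for chunk in chunks for x in chunk]
print(max(abs(a - b) for a, b in zip(grid, reassembled)))  # 0.0
```

Reassembling the chunks reproduces the single-grid result, which is the property a real decomposed code must preserve; the only data crossing rank boundaries is one value per side per step.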

Architectural Paradigms

Beyond advancements in individual components, new computing architectures have emerged since the Blue Gene era. Modern systems often employ heterogeneous architectures, combining CPUs, GPUs, and specialized accelerators to maximize performance in specific tasks. This departure from the homogeneous approach of Blue Gene demonstrates a greater emphasis on tailored solutions for different computational needs.

Comparison with Blue Gene

Compared to Blue Gene, modern supercomputers exhibit significantly higher processing speeds, larger memory capacities, and improved network interconnects. This enhanced performance translates to the ability to tackle significantly larger and more complex problems. For instance, the amount of data that can be processed in a given time frame has increased exponentially, enabling researchers to analyze massive datasets that were previously unmanageable.

The shift is not just about raw speed, but also about versatility and the ability to address a broader spectrum of scientific and engineering challenges.

Emerging Trends in Supercomputing

Several emerging trends are shaping the future of supercomputing. One notable trend is the growing importance of exascale computing, which targets at least a quintillion (10^18) floating-point operations per second (FLOPS). This is a significant leap in computational power, promising breakthroughs in various fields. Another key trend is the growing use of artificial intelligence (AI) techniques and machine learning algorithms within supercomputing environments.

This combination of powerful computing resources and sophisticated algorithms holds the potential to revolutionize data analysis and problem-solving. Furthermore, cloud-based supercomputing resources are becoming increasingly accessible, allowing researchers to access powerful computational tools without the need for extensive on-site infrastructure.
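To put the jump from petascale to exascale in concrete terms, the following back-of-the-envelope calculation assumes a hypothetical job of 10^21 floating-point operations and a perfectly sustained machine speed, which real systems never achieve.

```python
PETAFLOPS = 1e15  # 10^15 floating-point operations per second
EXAFLOPS = 1e18   # 10^18 floating-point operations per second
SECONDS_PER_DAY = 86_400


def runtime_seconds(total_flops, sustained_flops_per_s):
    """Idealized run time: total work divided by sustained speed (ignores efficiency losses)."""
    return total_flops / sustained_flops_per_s


workload = 1e21  # hypothetical job: 10^21 floating-point operations
petascale_days = runtime_seconds(workload, PETAFLOPS) / SECONDS_PER_DAY
exascale_minutes = runtime_seconds(workload, EXAFLOPS) / 60
print(f"petascale: {petascale_days:.1f} days, exascale: {exascale_minutes:.1f} minutes")
```

In this idealized model, a thousandfold increase in speed turns a job of more than eleven days into one of under twenty minutes, which is why exascale machines change which problems are practical to attempt.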

Closing Notes

IBM’s decision to shelve the Blue Gene project marks a significant chapter in the history of supercomputing. While the project’s direct development is ending, the legacy of its advancements and innovations will undoubtedly influence future developments. The article explored the reasons for this decision, its impact on various fields, and potential alternative uses for the technology. Ultimately, the shelving of Blue Gene raises questions about the future of supercomputing and the evolving landscape of technological innovation.

The implications of this move extend far beyond IBM, affecting the entire high-performance computing ecosystem.