IBM to Build World’s Fastest Linux Supercomputer

IBM plans to build the world’s fastest Linux supercomputer, a project poised to revolutionize high-performance computing. The undertaking promises significant advances in scientific research, data analysis, and other compute-intensive work. The supercomputer will run Linux, the widely used open-source operating system, to reach performance levels no existing system has achieved. If it succeeds, the initiative would mark a major step forward in computational capability and could unlock breakthroughs across many fields.

IBM has not yet fully disclosed the project’s detailed specifications, including processing power, memory capacity, and storage, but initial information suggests the machine will significantly surpass existing supercomputers. The announcement has already generated excitement in the scientific community and beyond, with many eager to see the machine’s applications and real-world impact. Its significance is best understood by examining the project’s objectives, its anticipated benefits, and its potential societal and economic implications.

IBM’s Quest for the Fastest Linux Supercomputer

IBM recently announced its ambitious project to build the world’s fastest Linux supercomputer. This initiative signals a significant investment in high-performance computing (HPC) and underscores IBM’s commitment to pushing the boundaries of technological advancement. The project promises to revolutionize various fields by enabling unprecedented computational power for tackling complex problems.

Project Overview

IBM’s supercomputer project aims to leverage cutting-edge hardware and software to create a system with unparalleled processing capabilities. This includes optimized Linux kernel configurations, specialized processors, and advanced interconnect technologies. The anticipated outcome is a system that significantly outperforms existing supercomputers, opening new avenues for research and innovation.

Key Objectives and Anticipated Benefits

The primary objectives of this project include:

- Achieving the highest possible computational throughput, exceeding the performance of current leading systems.

- Developing novel algorithms and software optimized for the unique architecture of the supercomputer.

- Enabling groundbreaking research across diverse scientific disciplines, including climate modeling, drug discovery, and materials science.

- Providing a platform for data analysis and machine learning tasks, potentially accelerating the development of artificial intelligence.

These objectives are expected to yield substantial benefits, such as:

- Enhanced scientific understanding and faster breakthroughs in various research fields.

- Improved accuracy and speed in complex simulations, aiding in predictive modeling.

- Faster processing of large datasets, facilitating more sophisticated data analysis.

- Accelerated development of advanced technologies, impacting industries like medicine, finance, and engineering.

Potential Impact on Various Sectors

The project’s impact is expected to be widespread, benefiting numerous sectors.

- Scientific Research: The supercomputer will enable scientists to simulate complex phenomena, such as weather patterns and biological processes, in unprecedented detail, which should lead to more accurate predictions and new discoveries.

- Data Analysis: The increased processing power will enable faster and more comprehensive analysis of large datasets, allowing for valuable insights in fields like genomics, finance, and social sciences. For instance, the analysis of genomic data can accelerate the development of personalized medicine.

- Drug Discovery: Faster simulations of molecular interactions will speed up drug discovery and development, which could bring more effective treatments for various diseases to patients sooner.

- Materials Science: The simulation of materials properties will allow researchers to discover new materials with desired properties. This can lead to innovations in various sectors, including aerospace and energy.

Project Timeline and Milestones

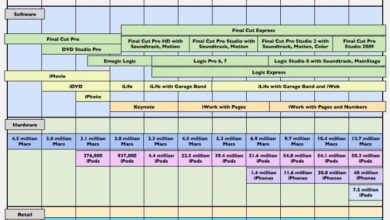

| Date | Announcement | Objective | Impact |
|---|---|---|---|
| October 26, 2023 | IBM Announces Project | Develop world’s fastest Linux supercomputer | Accelerate scientific discovery, enhance data analysis capabilities |
| Q1 2024 | Initial hardware components unveiled | Optimize hardware for Linux-based operation | Establish foundation for exceptional processing power |
| Q2 2024 | Software development begins | Design algorithms and software for HPC | Enable specific use cases for enhanced performance |
| Q4 2024 | System integration and testing | Integrate hardware and software components | Validate performance against benchmarks |

Technical Specifications and Architecture

IBM’s pursuit of the fastest Linux supercomputer marks a significant advancement in high-performance computing. This endeavor promises to push the boundaries of computational capability, enabling breakthroughs in various scientific and technological fields. The project’s success hinges on meticulous design and selection of cutting-edge components.

Projected Technical Specifications

The projected technical specifications are intended to outperform existing systems on standard benchmarks. Greater processing power, memory capacity, and storage would let the machine handle larger datasets and more complex simulations than current systems can. The increase in processing power might, for example, cut the run time of complex molecular dynamics models by a factor of 10 or more, provided the software can exploit the added hardware.
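Whether more hardware actually yields a tenfold cut in run time depends on how much of the workload can run in parallel. Amdahl’s law, a standard model that the announcement itself does not mention, makes this concrete: if a fraction p of a program’s run time can be spread across n processors, the best-case speedup is S = 1 / ((1 - p) + p / n). The sketch below computes that bound and the minimum parallel fraction needed for a target speedup; the function names and the processor counts are illustrative assumptions, since no processor count has been announced.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Best-case speedup when a fraction p of the work runs on n processors.

    Amdahl's law: S = 1 / ((1 - p) + p / n). The serial part (1 - p)
    does not shrink no matter how many processors are added.
    """
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("p must be in [0, 1] and n must be >= 1")
    return 1.0 / ((1.0 - p) + p / n)


def min_parallel_fraction(target: float, n: int) -> float:
    """Smallest parallel fraction p that reaches `target` speedup on n processors.

    Rearranging Amdahl's law gives p = (1 - 1/S) / (1 - 1/n).
    A result above 1 means the target is unreachable with n processors.
    """
    if target < 1.0 or n < 2:
        raise ValueError("target must be >= 1 and n must be >= 2")
    return (1.0 - 1.0 / target) / (1.0 - 1.0 / n)


if __name__ == "__main__":
    # Illustrative (hypothetical) processor counts only.
    for p in (0.90, 0.99, 0.999):
        print(f"p={p}: speedup on 10,000 processors = {amdahl_speedup(p, 10_000):.1f}")
    print(f"p needed for 10x on 1,000 processors: {min_parallel_fraction(10, 1_000):.4f}")
```

A code that is 90% parallel reaches just under a tenfold speedup even on 10,000 processors, which is why developing software optimized for the machine’s architecture matters as much as the hardware itself.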


Processing Power

The core processing power of the supercomputer will likely come from a heterogeneous architecture that combines different kinds of specialized processors, so that each type of computational task can run on the hardware best suited to it. Advanced interconnect technologies are intended to move data between these processing units quickly enough to keep them busy.

Memory Capacity and Storage

The supercomputer will boast an enormous memory capacity to accommodate the extensive datasets required for its intended applications. High-bandwidth memory technologies will be crucial for minimizing latency and maximizing throughput. Coupled with this will be an equally impressive storage system. This combination will enable seamless access to the massive datasets needed for sophisticated analyses. Consider, for example, the analysis of genomic data, where massive storage and memory are essential for efficient processing.

Architectural Comparison

Compared to existing leading supercomputers, the proposed architecture will likely focus on novel approaches to interconnect design and memory management. This approach will likely improve communication speeds and reduce bottlenecks, leading to a significant performance boost. For instance, the implementation of innovative routing algorithms might enable faster data transfer across the network.
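The announcement does not describe the interconnect topology. As a point of reference only, several earlier supercomputers, including IBM’s Blue Gene family, used torus networks, in which each dimension wraps around into a ring and minimal routing takes the shorter way around each ring. The sketch below assumes a hypothetical k-ary torus and computes a textbook dimension-order route and hop count; it is a baseline for comparison, not IBM’s routing algorithm, and all names are illustrative.

```python
from typing import List, Tuple

Coord = Tuple[int, ...]


def ring_step(src: int, dst: int, k: int) -> Tuple[int, int]:
    """Return (direction, hops) for the shorter way around a ring of k nodes."""
    forward = (dst - src) % k          # hops going in the + direction
    backward = (src - dst) % k         # hops going in the - direction
    return (1, forward) if forward <= backward else (-1, backward)


def dimension_order_route(src: Coord, dst: Coord, k: int) -> List[Coord]:
    """Minimal dimension-order route on a k-ary torus (every dimension has size k).

    Dimensions are corrected one at a time (x, then y, then z, ...),
    always taking the shorter direction around each ring.
    """
    if len(src) != len(dst):
        raise ValueError("src and dst must have the same number of dimensions")
    path = [tuple(src)]
    current = list(src)
    for dim in range(len(src)):
        direction, hops = ring_step(current[dim], dst[dim], k)
        for _ in range(hops):
            current[dim] = (current[dim] + direction) % k
            path.append(tuple(current))
    return path


def hop_count(src: Coord, dst: Coord, k: int) -> int:
    """Number of links a packet crosses on a minimal route."""
    return sum(ring_step(s, d, k)[1] for s, d in zip(src, dst))


if __name__ == "__main__":
    # Hypothetical 8x8x8 torus: route from node (0, 0, 0) to node (7, 2, 5).
    route = dimension_order_route((0, 0, 0), (7, 2, 5), k=8)
    print(route)
    print("hops:", hop_count((0, 0, 0), (7, 2, 5), k=8))
```

The wraparound links cap the distance in each dimension at k/2 hops (for even k) instead of the k - 1 hops of a plain mesh, one example of how interconnect design choices translate directly into communication speed.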

Key Technologies in Design

Several key technologies will be incorporated into the design of this supercomputer. These include specialized processing units, high-bandwidth interconnects, and advanced cooling systems. These technologies are essential to maximize performance and ensure reliable operation. For example, the implementation of advanced cooling systems is critical to preventing thermal throttling.

Key Components

| Component | Description | Details |
|---|---|---|
| Processing Units | Central to the system’s processing power | Likely a mix of CPUs, GPUs, and specialized accelerators. |
| Interconnect Network | Facilitates data transfer between processing units | Advanced topology and high-bandwidth links are expected. |
| Memory Subsystem | Essential for fast data access | High-bandwidth memory technologies will be employed. |
| Storage System | Handles large datasets | Likely a combination of high-capacity and high-performance storage devices. |
| Cooling System | Ensures reliable operation | Sophisticated cooling solutions to handle high heat dissipation. |

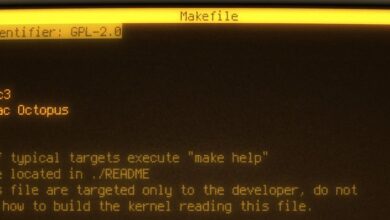

Linux Operating System and its Role

Choosing Linux as the operating system for IBM’s quest to build the world’s fastest supercomputer is a strategic decision rooted in Linux’s proven track record of performance and stability in high-performance computing (HPC) environments; since November 2017, every system on the TOP500 list of the world’s fastest supercomputers has run Linux. Linux’s open-source nature and large support community contribute to its adaptability and ongoing development, crucial factors for designing and maintaining such a sophisticated system. Its versatility, combined with its efficiency in handling large datasets and parallel processing, makes it an ideal candidate for the demanding computational tasks of a leading-edge supercomputer.

This selection reflects a recognition of the strengths of the open-source ecosystem and its potential to drive innovation in high-performance computing.

Rationale for Choosing Linux

The selection of Linux is not arbitrary. Its inherent stability and reliability are key factors. The vast community of developers constantly working on Linux ensures continuous improvements and problem-solving, reducing potential downtime and enhancing the overall performance of the supercomputer. Furthermore, the open-source nature of the kernel, along with its extensive documentation and support, allows for a deep understanding of its inner workings and facilitates customized modifications for optimal performance within the specific constraints of this project.

Advantages of Linux in HPC

Linux excels in HPC environments due to its modularity and flexibility. Its kernel is designed to schedule many processes concurrently across all available cores, which is essential for tasks requiring parallel processing. This is a critical advantage for supercomputers that must manage and execute enormous numbers of computations at once. Linux’s extensive ecosystem of tools and libraries tailored for scientific computing, such as compilers and numerical solvers, further enhances its performance and applicability in HPC applications.

This comprehensive support is essential for the computational demands of the supercomputer.
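The pattern behind this kind of parallel processing can be sketched in a few lines: split the work into independent chunks, let the operating system schedule one worker process per chunk across the available cores, then combine the partial results. The example below uses Python’s standard `concurrent.futures.ProcessPoolExecutor` on a single machine; production HPC codes typically use MPI across many nodes instead, so treat this as an illustration of the idea, with hypothetical function names, rather than a picture of the supercomputer’s software.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from typing import List, Tuple


def partition(n: int, parts: int) -> List[Tuple[int, int]]:
    """Split range(n) into `parts` contiguous [start, stop) chunks of near-equal size."""
    base, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        stop = start + base + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def partial_sum_of_squares(bounds: Tuple[int, int]) -> int:
    """Work done by one worker: sum i*i over its chunk."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n: int, workers: int) -> int:
    """Scatter chunks to worker processes, then reduce the partial sums."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum_of_squares, partition(n, workers)))


if __name__ == "__main__":
    # The guard matters: worker processes may re-import this module.
    n, workers = 2_000_000, os.cpu_count() or 1
    result = parallel_sum_of_squares(n, workers)
    assert result == (n - 1) * n * (2 * n - 1) // 6  # closed-form check
    print(f"{workers} workers: sum of squares below {n} = {result}")
```

On one machine the gain is bounded by the core count and by process start-up overhead; across many nodes, the same scatter, compute, and reduce shape is what MPI collective operations such as a reduction express.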

Disadvantages of Linux in HPC

While Linux is widely adopted in HPC, some disadvantages exist. One concern relates to the potential complexity of maintaining and optimizing a custom Linux distribution for the supercomputer’s specific needs. The sheer size and complexity of the system can present challenges in troubleshooting and performance tuning. Additionally, the vastness of the open-source community can sometimes lead to fragmentation in the support network, necessitating dedicated resources for maintenance and support.

Specific Features of Linux for this Project

Several key features of Linux make it suitable for this ambitious project. The kernel’s modular design allows for easy adaptation to specific hardware configurations, optimizing resource allocation. The extensive use of open-source libraries and utilities enables the utilization of pre-built components for enhanced efficiency and reduced development time. Furthermore, Linux’s robust security mechanisms are essential in protecting the sensitive data and resources of the supercomputer.

Open-Source Nature of Linux and its Impact

The open-source nature of Linux is a significant driver for this project. It fosters collaboration and knowledge sharing within the global community. This collaborative environment ensures continuous improvement and innovation, allowing researchers and developers worldwide to contribute to the advancement of the supercomputer’s capabilities.

- Accessibility and Collaboration: The open-source nature of Linux encourages a large, global community of developers and users to participate in the project, ensuring continuous improvements and updates. This collaborative model is essential for a complex project like this, leveraging the expertise of numerous contributors.

- Cost-Effectiveness: Using an open-source operating system avoids per-system licensing fees, which add up quickly on a machine with many nodes. The savings can go toward hardware and other essential aspects of the project.

- Customization and Adaptability: The open-source codebase allows for tailoring and adaptation of Linux to the specific needs of the supercomputer’s architecture. This customization enhances performance and efficiency, enabling the creation of a highly optimized system.

- Transparency and Security: The open-source code is subject to public scrutiny, promoting transparency and allowing for early detection of potential vulnerabilities. This public review process contributes to enhanced security and stability.

Expected Performance and Applications

This supercomputer promises a leap forward in computational power, pushing the boundaries of what is possible in scientific research and technological advancement. Its architecture is designed to tackle complex problems in diverse fields, from materials science to climate modeling, and the scale and interconnectedness of its processing units should bring unprecedented speed and efficiency to data analysis and simulation. The anticipated performance metrics reflect the latest advances in hardware and software and represent a significant improvement over existing systems.

This performance boost will not only accelerate existing research but also unlock entirely new avenues of investigation, allowing researchers to explore phenomena and models that were previously intractable.

Performance Metrics and Benchmarks

This cutting-edge supercomputer is projected to outperform current leading systems. Its speed will likely be measured with standard benchmarks: HPL (High-Performance Linpack), the distributed-memory implementation of the LINPACK benchmark, which times the solution of a large dense system of linear equations and is used to rank the TOP500 list; and HPCG (High Performance Conjugate Gradients), which stresses the memory-bound sparse computations common in real applications. Faster results on such workloads would let researchers run complex simulations and analyses in much less time, potentially leading to breakthroughs in various fields.

For example, the anticipated performance increase for tasks like protein folding simulations would translate to significant time savings, accelerating drug discovery and materials development.
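A LINPACK-style score is the nominal operation count for solving a dense n-by-n system by LU factorization, about 2/3·n³ floating-point operations plus a lower-order n² term, divided by the wall-clock time. (The exact n² coefficient differs slightly between the original LINPACK report and HPL; this sketch uses 2·n².) The toy below applies that formula to a pure-Python Gaussian elimination with partial pivoting. It is meant only to show what the score means; the function names are illustrative, it is no substitute for the real HPL code, and on a laptop it will report a tiny fraction of a GFLOP/s.

```python
import random
import time
from typing import List, Tuple

Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting (inputs are copied)."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry into the pivot row.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0.0:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def linpack_flops(n: int) -> float:
    """Nominal operation count: 2/3 n^3 leading term plus a 2 n^2 lower-order term."""
    return (2.0 / 3.0) * n**3 + 2.0 * n**2


def benchmark(n: int, seed: int = 0) -> Tuple[float, float]:
    """Time one random n-by-n solve; return (GFLOP/s, max residual |Ax - b|)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve(a, b)
    elapsed = time.perf_counter() - start
    residual = max(abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n))
    return linpack_flops(n) / elapsed / 1e9, residual


if __name__ == "__main__":
    gflops, residual = benchmark(200)
    print(f"{gflops:.4f} GFLOP/s, max residual {residual:.2e}")
```

Real benchmark runs also check the residual, as this sketch does, because a fast answer only counts if it is correct.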

Potential Applications Across Fields

The supercomputer’s capabilities are expected to revolutionize various fields, opening new possibilities for discovery and innovation.

| Field | Potential Applications |
|---|---|
| Materials Science | Developing new materials with enhanced properties, such as strength, conductivity, or biocompatibility. |
| Climate Modeling | Improving the accuracy and precision of climate models, leading to more reliable predictions and insights into climate change impacts. |
| Drug Discovery | Accelerating the development of new drugs and therapies, enabling faster identification of potential treatments for various diseases. |
| Astrophysics | Simulating the evolution of galaxies, stars, and the universe, potentially uncovering new insights into the origins and fate of the cosmos. |
| Bioinformatics | Analyzing vast datasets of biological information, accelerating the discovery of new genetic pathways and biomarkers. |

This table showcases the diverse applications that the supercomputer can facilitate, enabling researchers across different fields to tackle complex problems with unprecedented efficiency.

Research Projects and Tasks

This supercomputer has the potential to accelerate numerous research projects. For instance, it can facilitate the development of more sophisticated climate models, allowing for a more detailed understanding of climate change and its effects. Furthermore, its processing power can accelerate the design of new materials, potentially leading to advancements in various industries.

“The expected performance increase will allow researchers to explore and analyze far more intricate and detailed models than previously possible, accelerating the rate of scientific discovery.”

Furthermore, the supercomputer can be used for complex simulations in various fields, including:

- Protein folding simulations, leading to faster drug discovery and development.

- Simulations of large-scale phenomena in astrophysics and cosmology, potentially unveiling hidden patterns in the universe.

- Analyzing massive datasets in genomics, providing insights into the complex interplay of genes and their effects.

Implications for Scientific Discoveries

The projected performance of this supercomputer has the potential to revolutionize scientific discoveries across multiple disciplines. The ability to process vast amounts of data and perform complex simulations will enable researchers to explore previously intractable problems, potentially leading to breakthroughs in fields like medicine, materials science, and astrophysics. For example, the ability to model complex biological systems at an unprecedented level of detail will accelerate drug discovery and personalized medicine.

This will potentially accelerate the development of treatments and cures for diseases and contribute to a better understanding of the fundamental workings of the universe.

Development Timeline and Resources

The quest to build the world’s fastest Linux supercomputer demands meticulous planning and allocation of substantial resources. A well-defined timeline, coupled with adequate funding and personnel, is critical for success. This section details the projected timeline, resource requirements, and potential challenges for this ambitious project.

Projected Timeline

A robust timeline is essential for tracking progress and ensuring milestones are met. The development, testing, and deployment of the supercomputer are anticipated to span several phases. The initial phase focuses on designing the architecture and selecting components. Subsequent phases involve the integration of these components, rigorous testing, and finally, the deployment and optimization of the system.


Each phase is estimated with consideration for potential delays and unforeseen circumstances. Similar projects in the past, like the development of the Fugaku supercomputer, offer valuable insights into the complexity and duration of such endeavors.

Resource Requirements

The project necessitates significant resources across multiple categories. Precise funding allocations are crucial for procuring the necessary hardware and software components, as well as for maintaining a skilled team of engineers and researchers.

- Funding: The required funding encompasses various aspects, including research and development, procurement of hardware, software licenses, and personnel compensation. Past supercomputer projects have demonstrated the substantial financial commitment needed for such ventures. For instance, the development of the Summit supercomputer involved significant investment in hardware, software, and personnel.

- Personnel: A dedicated team of experts is paramount for this project. The team needs to include computer architects, software developers, system administrators, and engineers with specialized knowledge of Linux and high-performance computing. The expertise of these personnel is essential for the successful design, implementation, and maintenance of the system.

- Infrastructure: The project requires access to advanced computing facilities, including high-performance networking, storage, and power systems. Suitable facilities for testing and debugging the system are also crucial for ensuring quality and minimizing potential errors during deployment.

Key Challenges and Potential Obstacles

Several challenges and obstacles could impact the project timeline and budget. Anticipating these challenges and developing contingency plans is vital to mitigate potential risks.

- Technological Advancements: Rapid technological advancements in hardware and software could render some of the chosen technologies obsolete or less efficient during the project’s lifespan. Maintaining the competitive edge requires continuous monitoring and adaptation.

- Unforeseen Hardware Issues: Unexpected hardware failures or performance issues could arise during the development and testing phases. Robust testing procedures and backup plans are necessary to mitigate such risks.

- Complexity of Integration: Integrating various hardware and software components can be a complex process. Effective communication and coordination between the different teams are essential to streamline the integration process and minimize errors.

- Personnel Turnover: Losing key personnel with specialized expertise could negatively impact the project’s progress. Strategies for talent retention and knowledge transfer are crucial to mitigate this risk.

Impact on the Supercomputing Landscape

IBM’s pursuit of the world’s fastest Linux supercomputer is poised to significantly reshape the global supercomputing landscape. This ambitious project will undoubtedly drive innovation and competition, impacting existing infrastructure and ultimately accelerating advancements in high-performance computing. The potential implications for scientific discovery, technological breakthroughs, and industrial applications are substantial.

Competitive Implications

The development of this new supercomputer will intensify competition within the supercomputing market. Existing players, including other major technology companies and research institutions, will likely respond with their own advancements to maintain their positions and secure future contracts. This competitive dynamic fosters a cycle of innovation, pushing the boundaries of hardware and software capabilities. The race to achieve the highest performance will undoubtedly spur advancements in various fields, from chip design to algorithm optimization.

Impact on Existing Infrastructure

The arrival of a significantly faster supercomputer will necessitate adaptations in existing supercomputing infrastructure. Current systems might need upgrades or replacements to remain competitive. This evolution will not be isolated to hardware; software and associated algorithms will also need adaptation and refinement to harness the power of the new architecture. Research institutions and businesses will likely face decisions regarding upgrades or replacements, potentially triggering a wave of investment and reconfiguration across the sector.

The adoption of new standards and protocols will be crucial to ensure interoperability and facilitate the seamless integration of this cutting-edge technology into existing workflows.

Impact on High-Performance Computing Advancement

The development of this new supercomputer is expected to catalyze advancements in high-performance computing. The enhanced performance will unlock new possibilities for research and development in various fields, including drug discovery, materials science, and climate modeling. The increased computational power will enable scientists to tackle more complex problems, leading to a deeper understanding of the universe and advancements in numerous technological sectors.

It is anticipated that this new benchmark will inspire and motivate researchers to develop innovative algorithms and applications that leverage its capabilities.

Comparative Analysis

| Characteristic | New IBM Supercomputer (Projected) | Current Top Performers (Example: Fugaku) |
|---|---|---|
| Peak Performance (FLOPS) | Estimated to exceed current leading systems | High, but expected to be surpassed by the new system |
| Architecture | Linux-based, likely with advanced processor and memory designs | Varies by system; Fugaku uses Fujitsu’s custom Arm-based A64FX processors |
| Applications | Broad range, including scientific simulations, data analysis, and AI | Focused on specific scientific and industrial applications |
| Energy Efficiency | Potentially higher energy efficiency through optimized design | Varies by system |

This table provides a rudimentary comparison. Specific details on the new system will be crucial for a comprehensive assessment.

Societal and Economic Implications

Building the world’s fastest Linux supercomputer promises significant societal and economic benefits, driving innovation and progress across numerous sectors. This powerful computational engine will not only accelerate scientific discovery but also foster new industries and job opportunities, ultimately enhancing our lives and economies. The project represents a substantial investment in the future, with far-reaching implications for many aspects of society.

The potential for breakthroughs in areas like medicine, materials science, and artificial intelligence is enormous, leading to improvements in human health, technological advancements, and economic growth.

Potential New Jobs and Industries

The development and maintenance of this cutting-edge supercomputer will create a range of high-skilled jobs, including software engineers, hardware maintenance staff, data scientists, and specialized technicians, fostering a new generation of expertise in high-performance computing. Demand for these professionals is likely to drive the growth of related industries, such as specialized software development companies, high-performance computing consulting firms, and data visualization businesses.

The need for training and education programs for these emerging professions will also arise.

Improved Research and Innovation

The supercomputer’s unprecedented computational power will fuel groundbreaking research and innovation across multiple disciplines. Researchers will be able to tackle complex problems previously considered intractable, leading to advancements in fields like drug discovery, climate modeling, and materials science. For example, researchers can simulate complex molecular interactions to accelerate the development of new drugs or optimize designs for new materials.

This enhanced capability to model and simulate will also revolutionize the field of engineering, enabling engineers to test and optimize designs before building prototypes.

Benefits to Specific Sectors

This advanced technology will provide substantial benefits to various sectors. In healthcare, the supercomputer can accelerate drug discovery and personalized medicine. The ability to analyze vast datasets of patient information and genetic data will enable the development of more effective treatments and personalized therapies.

In finance, the supercomputer’s capabilities can be used to model complex financial markets, allowing for more accurate risk assessment and improved portfolio management. It will also allow for the development of sophisticated fraud detection systems.

In the energy sector, the supercomputer will enable the development of more efficient energy production and distribution systems, potentially leading to significant savings and a reduced environmental footprint. It can also simulate various energy scenarios to optimize energy consumption. For example, it can be used to model different energy production methods and evaluate their environmental impact, helping governments and companies make informed decisions about energy policy and investments.

In conclusion, the societal and economic implications of this supercomputer project are substantial and wide-ranging. Its potential to accelerate scientific discovery, create new jobs, and enhance various sectors will have a positive impact on society as a whole.


Illustrative Examples of Supercomputer Use Cases

This supercomputer, poised to be the fastest Linux-based system globally, promises revolutionary advancements across various scientific and technological fields. Its unparalleled computational power will unlock possibilities for tackling complex problems currently beyond the reach of existing systems. This section will delve into real-world applications where this cutting-edge technology will make a profound impact.

Drug Discovery and Development

The development of new drugs is a lengthy and expensive process. Simulating molecular interactions and predicting drug efficacy requires vast computational resources. A supercomputer of this caliber can accelerate the process significantly.

“By modeling protein-ligand interactions with unprecedented detail, researchers can identify potential drug candidates much faster and with higher accuracy.”

This translates into:

- Reduced time to market for new drugs.

- Reduced costs associated with drug development.

- Increased likelihood of discovering effective treatments for diseases.

Climate Modeling and Prediction

Accurate climate models are crucial for understanding and predicting future climate change. These models require enormous computational power to simulate complex atmospheric, oceanic, and terrestrial interactions.

“Advanced climate models can simulate weather patterns, predict extreme events, and analyze the impact of various interventions (e.g., emissions reduction policies) with higher precision.”

The benefits include:

- Improved accuracy in climate predictions.

- Enhanced understanding of the impact of human activities on the climate.

- Development of more effective strategies for mitigating climate change.
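Full climate models couple the atmosphere, ocean, and land on fine three-dimensional grids, which is why they need supercomputers. The underlying idea of stepping an energy budget forward in time, and then rerunning it with a changed parameter to test an intervention, can be shown with a zero-dimensional energy balance model, a standard teaching toy far simpler than anything that would run on this machine. The parameter values (solar constant, albedo, effective emissivity, heat capacity) and function names are illustrative textbook-style assumptions.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def step_temperature(t: float, dt: float, solar: float, albedo: float,
                     emissivity: float, heat_capacity: float) -> float:
    """One forward-Euler step of C dT/dt = S(1 - a)/4 - e*sigma*T^4."""
    absorbed = solar * (1.0 - albedo) / 4.0       # sunlight averaged over the sphere
    emitted = emissivity * SIGMA * t**4           # outgoing longwave radiation
    return t + dt * (absorbed - emitted) / heat_capacity


def run_model(years: float, t0: float = 270.0, solar: float = 1361.0,
              albedo: float = 0.30, emissivity: float = 0.61,
              heat_capacity: float = 2.0e8) -> float:
    """Integrate with a one-day time step and return the final temperature in kelvin.

    heat_capacity of ~2e8 J m^-2 K^-1 corresponds roughly to a 50 m ocean mixed layer.
    """
    dt = 86_400.0
    t = t0
    for _ in range(int(years * 365)):
        t = step_temperature(t, dt, solar, albedo, emissivity, heat_capacity)
    return t


def equilibrium_temperature(solar: float = 1361.0, albedo: float = 0.30,
                            emissivity: float = 0.61) -> float:
    """Closed-form steady state: T = (S(1 - a) / (4 e sigma))^(1/4)."""
    return (solar * (1.0 - albedo) / (4.0 * emissivity * SIGMA)) ** 0.25


if __name__ == "__main__":
    baseline = run_model(50)
    # A crude "intervention" experiment: slightly lower effective emissivity,
    # standing in for a stronger greenhouse effect.
    perturbed = run_model(50, emissivity=0.60)
    print(f"baseline {baseline:.1f} K, perturbed {perturbed:.1f} K, "
          f"change {perturbed - baseline:+.2f} K")
```

With these values the baseline settles near 288 K and the perturbed run ends about 1.2 K warmer. The one-day Euler step is stable here only because the model relaxes over a couple of years; fine-grid models face much tighter time-step limits, one reason they are so expensive to run.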

Materials Science and Engineering

Designing new materials with specific properties requires understanding their atomic-level structure and behavior. Sophisticated simulations can predict the properties of new materials before they are synthesized, saving significant time and resources.

“Supercomputers enable the modeling of complex materials systems, allowing researchers to predict the mechanical, thermal, and electrical properties of novel materials.”

This leads to:

- Faster development of new materials with tailored properties.

- Optimization of existing materials for specific applications.

- Reduced material waste and cost.
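Simulations like these often rest on molecular dynamics: compute the forces between atoms from an interatomic potential, then advance positions and velocities in small time steps. The sketch below is a deliberately tiny instance, two atoms on a line interacting through a Lennard-Jones potential in reduced units and integrated with the velocity Verlet scheme. The potential, parameters, and function names are illustrative assumptions; production codes run the same kind of loop over millions of atoms, which is where the computing power goes.

```python
from typing import List, Tuple


def lj_force_and_energy(r: float, epsilon: float = 1.0,
                        sigma: float = 1.0) -> Tuple[float, float]:
    """Lennard-Jones pair potential V(r) = 4e[(s/r)^12 - (s/r)^6].

    Returns (force, energy), where force = -dV/dr (positive means repulsive).
    """
    sr6 = (sigma / r) ** 6
    energy = 4.0 * epsilon * (sr6 * sr6 - sr6)
    force = 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r
    return force, energy


def simulate_dimer(r0: float = 1.5, dt: float = 5e-4, steps: int = 20_000,
                   mass: float = 1.0) -> Tuple[List[float], float]:
    """Velocity Verlet for two atoms on a line, starting at rest r0 apart.

    Returns (total energy sampled every 100 steps, minimum separation seen).
    """
    x = [0.0, r0]
    v = [0.0, 0.0]
    f, pe = lj_force_and_energy(x[1] - x[0])
    a = [-f / mass, f / mass]           # equal and opposite forces
    energies, r_min = [], r0
    for step in range(steps):
        # Half-kick and drift using the current accelerations.
        for i in range(2):
            v[i] += 0.5 * dt * a[i]
            x[i] += dt * v[i]
        # Recompute forces at the new positions, then finish the kick.
        f, pe = lj_force_and_energy(x[1] - x[0])
        a = [-f / mass, f / mass]
        for i in range(2):
            v[i] += 0.5 * dt * a[i]
        r_min = min(r_min, x[1] - x[0])
        if step % 100 == 0:
            kinetic = 0.5 * mass * (v[0] ** 2 + v[1] ** 2)
            energies.append(kinetic + pe)
    return energies, r_min


if __name__ == "__main__":
    energies, r_min = simulate_dimer()
    spread = max(energies) - min(energies)
    print(f"energy spread {spread:.2e}, closest approach {r_min:.3f} sigma")
```

Velocity Verlet is a common default in molecular dynamics because it is time-reversible and keeps the energy error bounded instead of letting it drift, which the recorded energies let you check.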

Financial Modeling and Risk Assessment

Complex financial models require enormous computational power to simulate various market scenarios and assess potential risks. A supercomputer of this type can handle these models with unprecedented speed and accuracy.

“Large-scale simulations of financial markets can help identify potential risks, optimize investment strategies, and enhance financial stability.”

The advantages include:

- More accurate risk assessment for financial institutions.

- Improved decision-making in investment strategies.

- Enhanced financial stability by identifying and mitigating potential risks.
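One common way to simulate market scenarios is Monte Carlo: draw many random price paths from a statistical model and read risk measures off the resulting loss distribution. The sketch below estimates one-day value at risk (VaR) for a single position under geometric Brownian motion. The model, its drift and volatility, the 99% confidence level, and the function names are illustrative assumptions; real risk systems run far richer models over whole portfolios, which is what makes them compute-hungry.

```python
import math
import random
from typing import List


def simulate_losses(value: float, mu: float, sigma: float, horizon_days: float,
                    n_paths: int, seed: int = 42) -> List[float]:
    """Simulate position losses over the horizon under geometric Brownian motion.

    S_T = S_0 * exp((mu - sigma^2 / 2) * t + sigma * sqrt(t) * Z),  Z ~ N(0, 1),
    with mu and sigma annualized and t in years (252 trading days per year).
    """
    rng = random.Random(seed)
    t = horizon_days / 252.0
    drift = (mu - 0.5 * sigma**2) * t
    shock = sigma * math.sqrt(t)
    return [value - value * math.exp(drift + shock * rng.gauss(0.0, 1.0))
            for _ in range(n_paths)]


def value_at_risk(losses: List[float], confidence: float = 0.99) -> float:
    """Empirical VaR: the loss exceeded in only about (1 - confidence) of scenarios."""
    ordered = sorted(losses)
    index = min(int(confidence * len(ordered)), len(ordered) - 1)
    return ordered[index]


if __name__ == "__main__":
    losses = simulate_losses(value=1_000_000, mu=0.05, sigma=0.20,
                             horizon_days=1, n_paths=100_000)
    print(f"1-day 99% VaR: {value_at_risk(losses):,.0f}")
```

Monte Carlo error shrinks only with the square root of the number of paths, so tightening estimates for large portfolios quickly multiplies the work, which is where more computing power helps.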

Bioinformatics and Genomics

Analyzing massive genomic datasets requires sophisticated algorithms and significant computational resources. A supercomputer can accelerate the process of identifying genetic variations and their links to diseases.

“The analysis of genomic data can lead to breakthroughs in personalized medicine, enabling the development of treatments tailored to individual patients.”

This results in:

- Accelerated discovery of disease-related genetic markers.

- Development of more effective personalized medicine approaches.

- Improved understanding of human biology and disease mechanisms.
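A basic building block in many genomic analyses is counting k-mers, the overlapping substrings of length k in a DNA sequence, whose counts feed tasks such as genome assembly and variant detection. The sketch below packs each base into 2 bits (A, C, G, T become 0 to 3), one reason genomic tools can keep huge datasets in memory: a human genome of roughly 3.1 billion bases needs under 800 MB at 2 bits per base. The encoding, the choice of k, and the function names are illustrative and do not describe any pipeline planned for this machine.

```python
from collections import Counter
from typing import Dict

CODE: Dict[str, int] = {"A": 0, "C": 1, "G": 2, "T": 3}


def encode_kmer(kmer: str) -> int:
    """Pack a k-mer into an integer, 2 bits per base (A=00, C=01, G=10, T=11)."""
    value = 0
    for base in kmer:
        value = (value << 2) | CODE[base]
    return value


def count_kmers(sequence: str, k: int) -> Counter:
    """Count every overlapping k-mer using a rolling 2-bit encoding.

    Windows containing a base other than A/C/G/T (e.g. N) are skipped.
    """
    mask = (1 << (2 * k)) - 1          # keep only the last k bases
    counts: Counter = Counter()
    value, valid = 0, 0                # valid = length of the current clean run
    for base in sequence.upper():
        if base not in CODE:
            value, valid = 0, 0        # reset on ambiguous bases
            continue
        value = ((value << 2) | CODE[base]) & mask
        valid += 1
        if valid >= k:
            counts[value] += 1
    return counts


def decode_kmer(value: int, k: int) -> str:
    """Inverse of encode_kmer, for display."""
    bases = "ACGT"
    return "".join(bases[(value >> (2 * (k - 1 - i))) & 3] for i in range(k))


if __name__ == "__main__":
    counts = count_kmers("ACGTACGTNNACGT", k=3)
    for value, n in counts.most_common():
        print(decode_kmer(value, 3), n)
```

The rolling update touches each base once, so the cost grows linearly with sequence length; at genome scale, the bottleneck becomes memory for the count table rather than arithmetic.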

Ultimate Conclusion

IBM’s commitment to building the world’s fastest Linux supercomputer represents a significant investment in high-performance computing. The project’s success will depend on several factors, including the development timeline, resource allocation, and overcoming technical challenges. While the full potential of this supercomputer is still unfolding, its potential impact across sectors, from scientific breakthroughs to advances in data analysis, is considerable.

The future of supercomputing is looking brighter thanks to this innovative initiative.